Our CI system now helps us by giving us a centralized place to manipulate these Docker images: when a CI run is triggered (usually on a Pull Request), the target Docker images are tagged with the git commit SHAs and pushed to our repositories. The Ops team at SpiderOak has had its own effort migrating our services to Kubernetes, and as a result our teams started to deliver their work in Docker images so we could deploy these services more efficiently. Some projects even run quick automatic tests and linters for every single commit pushed in the repository, whether they are part of a Pull Request or not.

This helps us to quickly see the results of the work done by devs, gives quick access to builds that need to be used to (manually) test a feature to our QA team, and in general results in a good consolidation of our planning, development, and testing cycles. Part of the benefit of using so much of the Atlassian stack is that our Jira tickets have the corresponding development branches attached to them, along with the results of the Bamboo tests and builds.

#Spideroak semaphor vs one full#

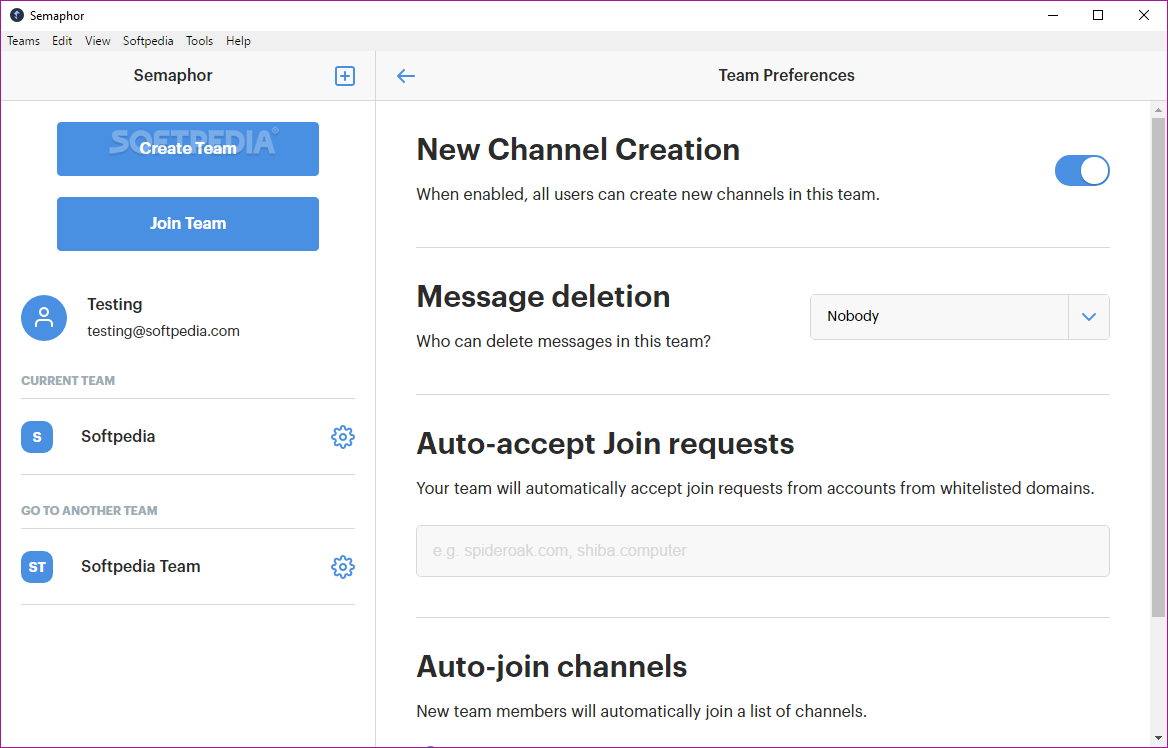

Autotesting policiesĮvery project at SpiderOak has its own autotesting policies, but in general every Pull Request created in a repository triggers a full suite of automatic tests and builds that have to be passing in order to merge the PR. Afterwards we started building new features in our infrastructure. Migrating our basic build systems of our products was a reasonably easy task, and after a month we were already building all of our current products in an automated way in Bamboo. More complex ones build and tag multiple Docker images, orchestrate containers for testing purposes, interact with servers and databases, and even deploy deliverables directly to our website, our Linux repositories and the App and Google Play Stores. Some projects simply required having scripted autotests passing before merging. In the end, due to the transparent integration with the rest of our Atlassian stack (Jira, Bitbucket, and Confluence) and the ease of use of multiple repositories, we went with Bamboo.ĭuring all of 2018 we've been implementing many features and experimenting with different ways of pushing the limits of our Continuous Integration setup, which vary a lot depending on the project. Many excellent options were considered: Jenkins, Travis, CircleCI, TeamCity and CruiseControl among them. We decided it was time for us to migrate to a different CI system that would help us tackle the issues we were having with Buildbot. Finally, the web UI was pretty outdated, since responsive UI was only a beta with many bugs in Buildbot by that time. Since Buildbot config files (that are essentially Python) cover everything from the checkout of each repository to the HTTP server used to display the results, the system became pretty hard to maintain.

#Spideroak semaphor vs one code#

In addition to that, since we now had many different artifacts related to the Semaphor backend that had to be built (the Flow server, many service bots, binaries used specifically for testing), our code for building became bigger and more complex. When new features were being developed across several repositories, attempting to automatically test the right combination of branches proved to be impossible. When we first created Semaphor, more than 20 repositories were part of the build process.

However, as SpiderOak grew, so did our requirements for building and automatic testing: first came Semaphor, then Share appeared too, our repositories started multiplying and things became much harder to handle. Back when SpiderOak ONE was our only product, one single Build Master and a few Build Slaves were enough to automatically handle our build infrastructure. Our build infrastructure used to be powered by Buildbot, an open-source Python-based CI/testing framework with more than 15 years of history. This post will describe the transformation suffered over these last years, and the current status of our build, release, and continuous integration infrastructure. We have covered everything from our repository workflows to the way we deploy our services, artifacts and deliverables both to the public and internally (to our QA team and the staging environments). Over the last few years at SpiderOak we've continuously directed our efforts to improve our Release infrastructure.
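To make the tagging step concrete (CI tags each target Docker image with the git commit SHA, then pushes it), here is a minimal sketch in Python. It is not SpiderOak's actual Bamboo configuration, which this post does not show. The helper names `image_refs` and `tag_and_push_commands` are hypothetical, and so are the registry, image name and SHA in the example. The sketch also assumes a full 40-character SHA-1 (as printed by `git rev-parse HEAD`) and adds an abbreviated-SHA tag purely for convenience. It only builds the `docker tag` / `docker push` command lines; it does not run them.

```python
import re
import shlex
from typing import List

# Assumption: commits are identified by a full 40-character hex SHA-1.
FULL_SHA = re.compile(r"[0-9a-f]{40}")


def image_refs(registry: str, image: str, commit_sha: str, short_len: int = 12) -> List[str]:
    """Return image references tagged with the full and the abbreviated commit SHA."""
    sha = commit_sha.strip().lower()
    if not FULL_SHA.fullmatch(sha):
        raise ValueError(f"expected a full git commit SHA, got {commit_sha!r}")
    repo = f"{registry.rstrip('/')}/{image}"
    # The full-SHA tag maps the image to exactly one commit; the short tag is
    # a convenience added by this sketch (an assumption, not from the post).
    return [f"{repo}:{sha}", f"{repo}:{sha[:short_len]}"]


def tag_and_push_commands(local_image: str, refs: List[str]) -> List[List[str]]:
    """Build (but do not run) the docker CLI calls a CI job would execute."""
    commands = []
    for ref in refs:
        commands.append(["docker", "tag", local_image, ref])  # add the SHA-based tag
        commands.append(["docker", "push", ref])  # upload that tag to the registry
    return commands


if __name__ == "__main__":
    # Hypothetical registry, image name and commit SHA, for illustration only.
    refs = image_refs("registry.example.com/spideroak", "flow-server",
                      "3f2c9a1b7d4e5f60718293a4b5c6d7e8f9012345")
    for cmd in tag_and_push_commands("flow-server:build", refs):
        print(shlex.join(cmd))
```

A real CI job would pass each command list to `subprocess.run(..., check=True)`. Keeping command construction separate from execution is simply what makes this sketch easy to inspect and test.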
